Architecting Real-Time Data for Continuous AI Decisions

The Importance of Real-Time Data in Modern Business

In today's fast-paced business environment, the ability to make decisions based on real-time data is no longer a luxury but a necessity. Real-time data architectures enable organizations to respond instantly to market changes, customer behaviors, and operational anomalies. This immediate responsiveness can be the difference between capitalizing on an opportunity and missing it entirely.

Real-time data provides businesses with up-to-the-minute insights that drive agile decision-making. For instance, retailers can adjust inventory levels based on current sales data, while financial institutions can detect and respond to fraudulent activities as they occur. The value of real-time data lies in its ability to provide a continuous stream of actionable insights, ensuring that businesses remain competitive and efficient in an ever-evolving landscape.

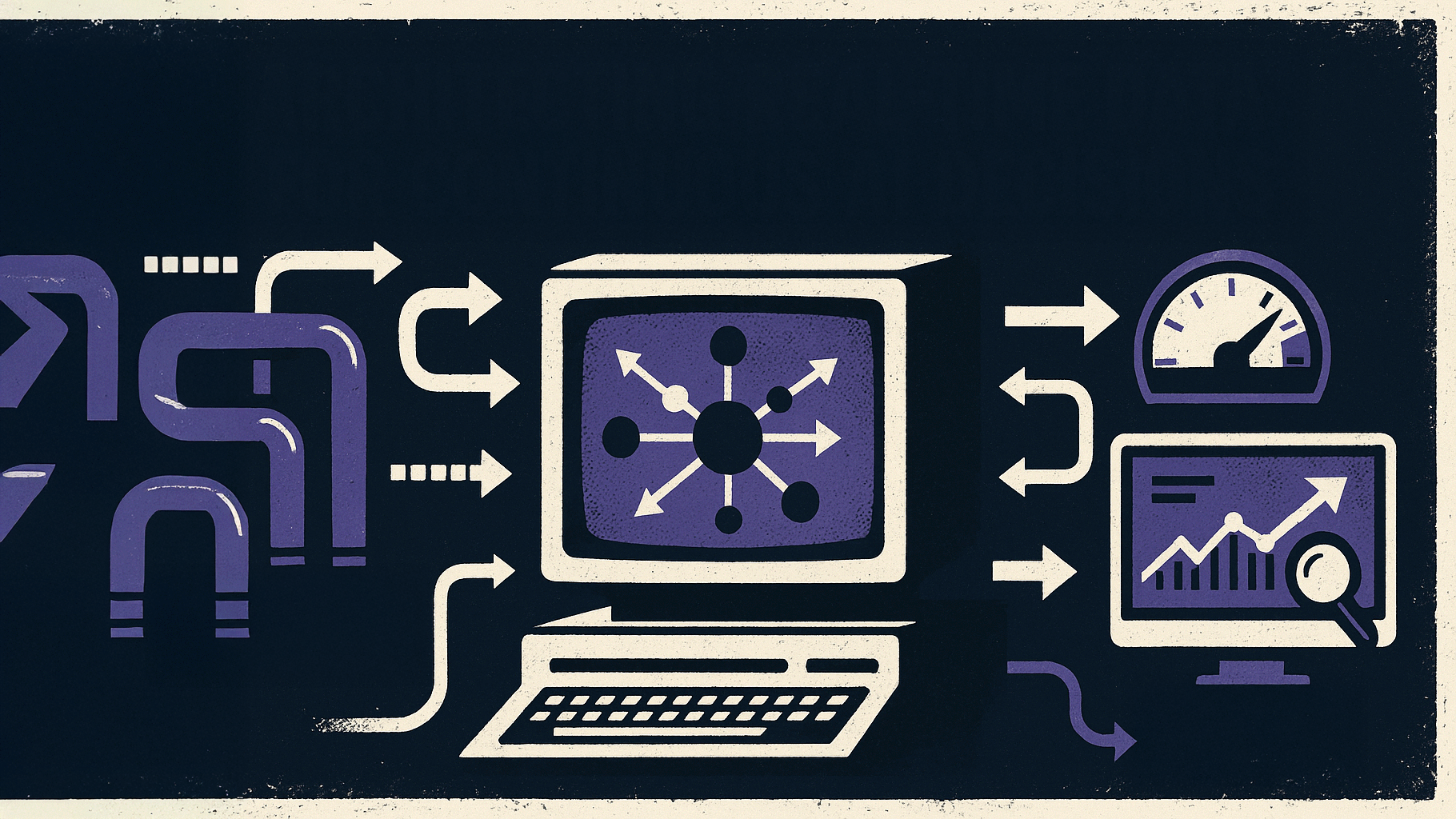

Building a Robust Streaming Ingestion System

A robust streaming ingestion system is the backbone of any real-time data architecture. It involves the continuous collection of data from various sources, such as IoT devices, social media feeds, transaction logs, and more. This data must be ingested in a manner that ensures minimal latency and maximum reliability.

To build an effective streaming ingestion system, organizations can leverage technologies like Apache Kafka, Amazon Kinesis, and Google Cloud Pub/Sub. These platforms ingest high-velocity data and make it available to downstream consumers with minimal delay. Additionally, buffering helps absorb load spikes, and partitioning by key spreads work across consumers while keeping related events in order.
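The buffering and partitioning ideas above can be sketched in a few lines of plain Python. This is a minimal illustration, not how Kafka, Kinesis, or Pub/Sub work internally: the `PartitionedBuffer` class, its method names, and the drop-oldest policy are all assumptions made for the example.

```python
import zlib
from collections import deque


class PartitionedBuffer:
    """Hypothetical in-process buffer: routes events by key hash, bounded per partition."""

    def __init__(self, num_partitions: int, max_buffered: int):
        self.num_partitions = num_partitions
        # A deque with maxlen discards the oldest item when a new one arrives at capacity.
        self.partitions = [deque(maxlen=max_buffered) for _ in range(num_partitions)]
        self.dropped = 0  # count of events lost to overflow, useful for monitoring

    def partition_for(self, key: str) -> int:
        # crc32 is stable across processes, unlike Python's built-in hash() for strings.
        return zlib.crc32(key.encode("utf-8")) % self.num_partitions

    def append(self, key: str, event: dict) -> int:
        """Buffer an event and return the partition it was routed to."""
        index = self.partition_for(key)
        buffer = self.partitions[index]
        if len(buffer) == buffer.maxlen:
            self.dropped += 1  # the oldest event is about to be evicted
        buffer.append(event)
        return index

    def drain(self, partition: int, max_items: int) -> list:
        """Remove and return up to max_items events from one partition, oldest first."""
        buffer = self.partitions[partition]
        batch = []
        while buffer and len(batch) < max_items:
            batch.append(buffer.popleft())
        return batch
```

Because the partition is derived from the key, all events for one key land in the same partition and are drained in arrival order. Dropping the oldest event on overflow is only one possible policy; a production system would more likely apply backpressure or persist to disk.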

Achieving Low-Latency Data Processing

Low-latency data processing is critical for turning ingested data into actionable insights while they are still useful. Achieving low latency involves optimizing the entire data processing pipeline, from ingestion to analysis.

One way to ensure low-latency processing is to use stream processing engines like Apache Flink or Spark Streaming. These technologies enable real-time processing by keeping working data in memory where possible, which reduces the time spent reading from and writing to disk. Additionally, employing parallel processing techniques and optimizing algorithms for speed can further reduce latency.
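To make the in-memory processing idea concrete, here is a minimal sketch of a tumbling-window aggregation in plain Python. It is not Flink or Spark code; the function name `tumbling_window_sums` and the `(timestamp, key, value)` event shape are assumptions for illustration, and it ignores concerns such as late events and state eviction that a real engine handles.

```python
from collections import defaultdict


def tumbling_window_sums(events, window_seconds):
    """Sum values per key within fixed, non-overlapping time windows.

    events: iterable of (timestamp_seconds, key, value) tuples (hypothetical shape).
    Returns a dict mapping (window_start, key) to the summed value.
    """
    sums = defaultdict(float)
    for timestamp, key, value in events:
        # Align each event to the start of the window that contains it.
        window_start = int(timestamp // window_seconds) * window_seconds
        sums[(window_start, key)] += value
    return dict(sums)
```

All state lives in a single dictionary in memory, which is what keeps each update cheap. Since windows for different keys are independent, the same logic can be run in parallel on separate partitions of the stream.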

It's also essential to monitor and fine-tune the performance of the data processing pipeline continuously. This includes identifying and addressing bottlenecks, optimizing resource allocation, and ensuring that the system can scale to handle increasing data volumes.

Creating Real-Time Analytics Dashboards

Real-time analytics dashboards are the front-end interfaces that allow decision-makers to visualize and interact with real-time data. These dashboards provide a consolidated view of key performance indicators (KPIs), trends, and anomalies, enabling swift and informed decision-making.

To create effective real-time analytics dashboards, organizations can use tools like Tableau, Power BI, and Looker Studio (formerly Google Data Studio). These platforms can connect to live or frequently refreshed data sources and offer a wide range of visualization options. It's crucial to design dashboards that are intuitive and customizable, allowing users to drill down into specific data points and receive alerts for critical events.

Additionally, incorporating advanced analytics and AI capabilities into dashboards can provide deeper insights. For example, predictive analytics can forecast future trends, while anomaly detection algorithms can highlight unusual patterns that require attention.
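As one illustration of the anomaly detection mentioned above, the sketch below flags values that sit far from the recent average using a rolling z-score. The class name, window size, and threshold are assumptions chosen for the example; real dashboards may rely on more sophisticated methods.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Hypothetical detector: flags a value that deviates strongly from recent history."""

    def __init__(self, window_size=50, threshold=3.0, min_samples=5):
        self.values = deque(maxlen=window_size)  # most recent observations only
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, x: float) -> bool:
        """Return True if x is anomalous relative to the current window, then record x."""
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            mean = sum(self.values) / len(self.values)
            variance = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = math.sqrt(variance)
            # With zero spread there is no meaningful z-score, so nothing is flagged.
            if std > 0 and abs(x - mean) / std > self.threshold:
                is_anomaly = True
        self.values.append(x)
        return is_anomaly
```

A dashboard could call `observe` on each new metric value and raise an alert when it returns `True`. Note that flagged values are still added to the window in this sketch, so a sustained shift stops being flagged once it dominates recent history.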

Integrating AI with Real-Time Data for Instant Decisions

Integrating AI with real-time data architectures enables organizations to automate decision-making processes and respond to events as they happen. AI algorithms can analyze vast amounts of data quickly, identifying patterns and generating insights that would be impractical for humans to discern in real time.

Machine learning models can be trained on historical data and then applied to real-time data streams to provide instant recommendations. For example, in the healthcare industry, AI can analyze patient data in real time to help predict and prevent adverse events. Similarly, in finance, AI can detect fraudulent transactions and trigger immediate interventions.
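The train-offline, score-online pattern described above might look roughly like the following. The weights, bias, feature names, and threshold are entirely hypothetical placeholders standing in for a model trained on historical data; they are not taken from any real fraud system.

```python
import math

# Hypothetical parameters, standing in for a logistic model trained offline.
WEIGHTS = {"amount_zscore": 1.2, "new_device": 2.0, "foreign_country": 1.5}
BIAS = -4.0


def fraud_probability(features: dict) -> float:
    """Apply the logistic function to a weighted sum of features (missing ones count as 0)."""
    z = BIAS + sum(weight * features.get(name, 0.0) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def score_stream(events, threshold=0.5):
    """Lazily score each event as it arrives and mark those at or above the threshold."""
    for event in events:
        probability = fraud_probability(event["features"])
        yield {
            "id": event["id"],
            "probability": probability,
            "flag": probability >= threshold,
        }
```

Because `score_stream` is a generator, each event is scored as soon as it is read rather than after the whole batch is collected. In practice the parameters would be loaded from a model registry or serving platform and refreshed as the model is retrained.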

To successfully integrate AI with real-time data, it's essential to have a robust infrastructure that supports the deployment and scaling of AI models. This includes leveraging cloud-based AI platforms like Google Cloud's Vertex AI, which offers tools for building, training, and deploying machine learning models at scale.

Case Study: Bayer's Use of AlloyDB for Efficient Data Processing

Bayer Crop Science provides a compelling example of how real-time data architectures can transform business operations. To support their agricultural advancements, Bayer needed a solution to efficiently process and analyze billions of data points collected from various sources, including satellite imagery and environmental data.

Bayer implemented AlloyDB for PostgreSQL, a cloud-native relational database service that offers low-latency processing and seamless scalability. This solution allowed Bayer to centralize its data processing, reducing response times by over 50% and increasing processing capacity fivefold.

With AlloyDB, Bayer's research and development teams can access real-time insights, enabling them to make data-driven decisions quickly. This has led to improved operational efficiency, enhanced collaboration, and better outcomes in their agricultural initiatives.

Best Practices for Maintaining Data Quality and Security

Maintaining data quality and security is paramount in a real-time data architecture. Poor data quality can lead to inaccurate insights, while security breaches can compromise sensitive information and erode trust.

To ensure data quality, organizations should implement robust data governance frameworks that include data validation, cleansing, and enrichment processes. Regular audits and monitoring can help identify and address data quality issues promptly. Additionally, using AI-powered data profiling tools can automate the detection of anomalies and inconsistencies.
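A validation step of the kind described above can be sketched as a small function that checks each event before it enters the pipeline. The field names (`event_id`, `timestamp`, `amount`) and the specific rules are assumptions for illustration; a real schema and rule set would come from the organization's governance framework.

```python
# Hypothetical schema: every event is expected to carry these fields.
REQUIRED_FIELDS = ("event_id", "timestamp", "amount")


def validate_event(event: dict, now: float, max_future_skew: float = 60.0) -> list:
    """Return a list of human-readable problems; an empty list means the event passed."""
    errors = []
    for field in REQUIRED_FIELDS:
        if event.get(field) is None:
            errors.append(f"missing field: {field}")

    amount = event.get("amount")
    if amount is not None:
        # bool is a subclass of int in Python, so it is excluded explicitly.
        if isinstance(amount, bool) or not isinstance(amount, (int, float)):
            errors.append("amount is not numeric")
        elif amount < 0:
            errors.append("amount is negative")

    timestamp = event.get("timestamp")
    if isinstance(timestamp, (int, float)) and not isinstance(timestamp, bool):
        # Tolerate small clock skew, but reject timestamps far in the future.
        if timestamp > now + max_future_skew:
            errors.append("timestamp is in the future")
    return errors


def split_valid(events, now: float):
    """Separate events into those that pass validation and those rejected with reasons."""
    valid, rejected = [], []
    for event in events:
        problems = validate_event(event, now)
        if problems:
            rejected.append((event, problems))
        else:
            valid.append(event)
    return valid, rejected
```

Keeping rejected events together with their reasons, rather than silently dropping them, makes the regular audits mentioned above much easier.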

Data security must be addressed through a multi-layered approach. This includes implementing strong access controls, encryption, and monitoring for unauthorized access. Organizations should also stay compliant with relevant data protection regulations and continuously update their security protocols to address emerging threats.

Future Trends in Real-Time Data Architecture and AI

The future of real-time data architecture and AI is poised for exciting advancements. As data volumes continue to grow, the need for more efficient and scalable solutions will drive innovation in this space.

One emerging trend is the use of edge computing, where data processing occurs closer to the source of data generation. This reduces latency and bandwidth usage, making real-time data processing more efficient. Additionally, advancements in AI, such as federated learning, will enable more secure and collaborative model training across different organizations.
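To give a sense of how federated learning combines work from several participants, the sketch below shows the weighted averaging step often used to merge locally trained model parameters. It is a simplified illustration under stated assumptions: the function name and the flat list-of-floats representation are hypothetical, and real systems add secure aggregation, communication, and many training rounds.

```python
def federated_average(client_updates):
    """Combine model weights from several clients, weighted by their sample counts.

    client_updates: list of (weights, num_samples) pairs, where weights is a list
    of floats of equal length for every client (hypothetical representation).
    """
    total_samples = sum(num_samples for _, num_samples in client_updates)
    if total_samples <= 0:
        raise ValueError("at least one client must contribute samples")

    dimension = len(client_updates[0][0])
    averaged = [0.0] * dimension
    for weights, num_samples in client_updates:
        if len(weights) != dimension:
            raise ValueError("all clients must send weight vectors of the same length")
        for i, weight in enumerate(weights):
            # Clients with more data have proportionally more influence.
            averaged[i] += weight * num_samples / total_samples
    return averaged
```

Only model parameters and sample counts leave each participant in this scheme; the raw training data stays where it was generated, which is the property that makes the approach attractive for collaboration across organizations.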

Another trend is the integration of real-time data architectures with augmented reality (AR) and virtual reality (VR) technologies. This can provide immersive data visualization experiences, allowing users to interact with real-time data in novel ways.

In conclusion, real-time data architectures are essential for powering AI-driven decisions and maintaining a competitive edge in today's dynamic business environment. By adopting best practices in streaming ingestion, low-latency processing, real-time dashboards, and data quality and security, organizations can unlock the full potential of their data and drive continuous improvement.